Spiffy’s 3 pronged approach keeps your customers in control and ensures the integrity of your brand.

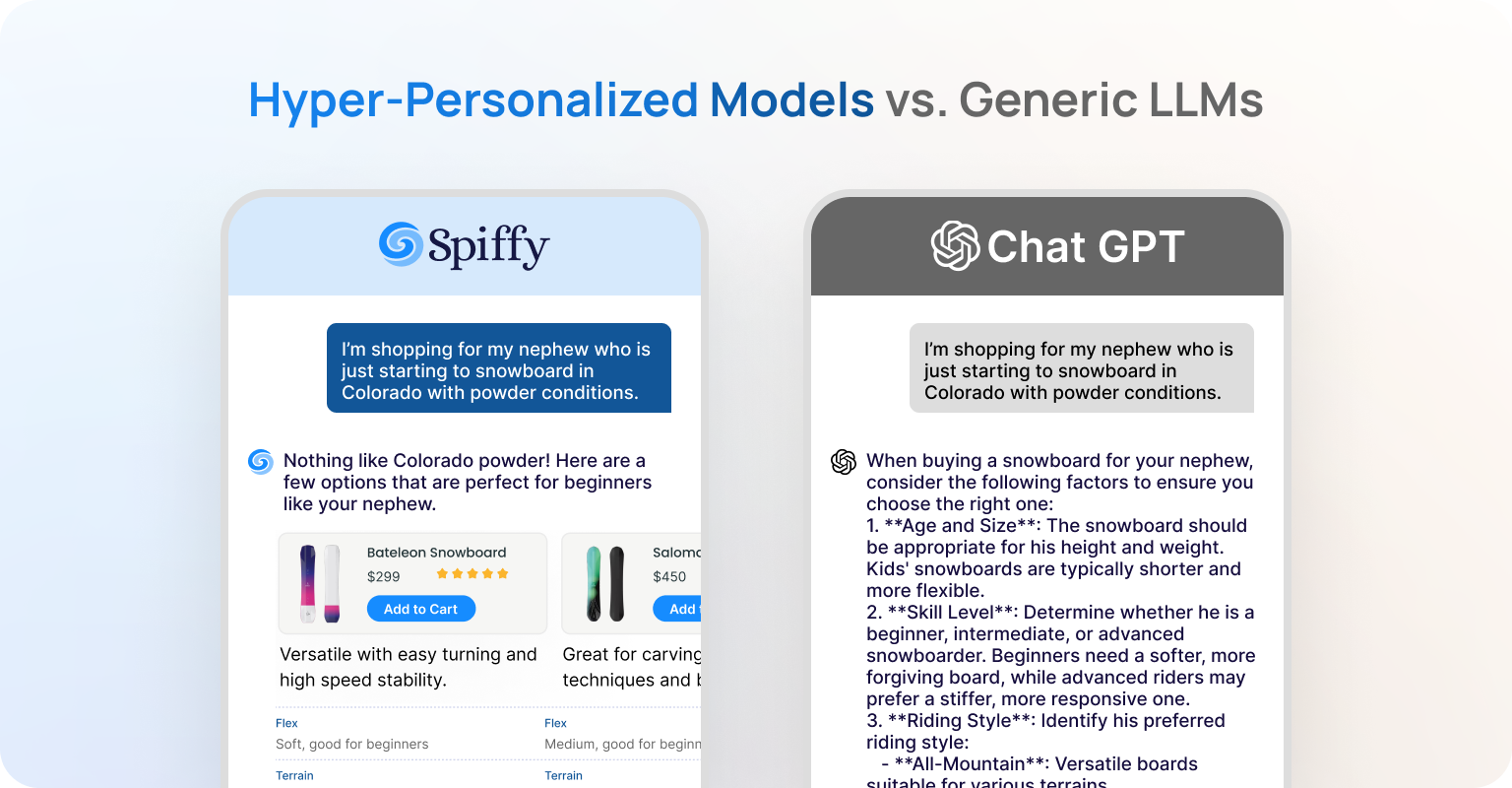

LLMs have stunned the world with their ability to generate text that’s remarkably similar to human-produced language, making them useful for analyzing vast datasets, automating tedious tasks, and more. Retailers specifically - whose #1 goal is to build enduring relationships with their customers - have their ears to the ground and are eager to learn how to use the technology to scale their interactions.

But haphazardly deploying AI products is a recipe for frustration in the best case—and PR nightmares in the worst.

Air Canada, for example, lost a lawsuit because its AI-powered chatbot mistakenly shared with a passenger the airline’s bereavement fare policy. Or consider the shopping platform Curated, whose experimental GPT-4-powered shopping assistant errantly told customers to buy products from other retailers.

These errors have substantial repercussions—that’s why Spiffy uses a three-pronged approach to ensure its customers are in control of every interaction so brand integrity remains intact.

1. Tailormade Models

Safety by design means starting from a foundation of high-quality data in order to fuel a positive feedback loop. LLMs such as GPT-4 are trained on the entire Internet, which is rife with errors and misinformation.

Your Spiffy AI models, on the other hand, are trained on your unique digital footprint, including user behavioral data (CDP), custom ML models, product catalog, order management system (OMS). And further, we deliberately ensure each input that goes into our models is high-quality. For example, refusals (the model refusing to do something) are often tacked on with prompting, which is easy to circumvent. We incorporated refusals natively into the training step, resulting in significantly enhanced performance and reliability against hallucinations.

Use your own brand content to train the model

2. Red Teaming

Red teaming is a process where we challenge a system to proactively identify and eliminate safety risks and undesirable behaviors by thinking like an adversary.

Generating harmful, discriminatory, or illegal responses is not acceptable. Here’s a look at the safety scores (0-100) for 10 popular LLMs, which many teams rely on for customer-facing AI.

A score of 99.18 might look strong. But when you deploy that AI across millions of customer interactions, you run the risk of thousands of unsafe experiences. That's why Spiffy’s AI engineers engage in red teaming, a process where we challenge a system to proactively identify and eliminate safety risks and undesirable behaviors by thinking like an adversary.

As a result, we have a safety score of 100.00, making Spiffy the safest consumer grade AI as evidenced by industry benchmarks.

3. Consumer Grade AI

Today’s commercial AI, like OpenAI’s GPT-4, can be prompted to generate text that mimics human interaction—but mimicking is not the same as truly understanding a company's values, tone, and audience.

Prompting an LLM to produce a specific output is like learning to play the Star Wars theme on a piano by memorization, a short-term solution. In contrast, alignment involves fundamentally retraining the LLM, akin to teaching it to understand music theory and composition, resulting in a skill that is enduring and adaptable across contexts.

Spiffy protects against all funky or nefarious prompts.

In other words, alignment is the process of deeply integrating human values into LLMs to make them as helpful, truthful, and safe as possible, ensuring they act in ways that genuinely reflect user expectations and company standards.

This distinction is why the Air Canada customer service AI mistakenly informed a customer that he could receive a discount under the airline's bereavement policy after the purchase. This miscommunication, resulted in a financial loss and legal repercussions for Air Canada, could have been avoided through alignment.

Alignment is crucial for making an LLM that is ‘consumer grade’ with 100% predictable behavior. Spiffy’s alignment interface enables you to adapt model behavior, for example, retraining it to embody your brand values, act on seasonal trends, or know when to upsell based on purchasing behavior.

We squarely focused on helping your team meet and exceed goals safely. We hope the above summary helps demystify how Spiffy is approaching safety and gives you confidence that our approach is best-in-class.